We added a tag system to entities. This allows you to tag each entity with an unlimited number of networked strings. Like classes in HTML/CSS, this is incredibly simple and incredibly powerful.

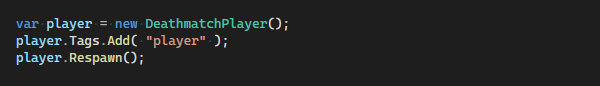

Here's the simplest example, tagging a player with the "player" tag. Now every system in the game will be able to test for that tag and know it's a player.

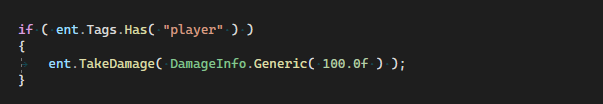

Generic checks like this are useful in s&box because our Pawn might just be a bare entity. It doesn't necessarily have a common Player base class we can test for.

But with this system it's easy to determine if that entity should be treated as a player, or an npc, or a grenade etc without checking for classes directly.
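To make the idea concrete, here is a minimal sketch of checking tags instead of classes. It is written in Python rather than s&box's C#, and `Entity`, `has_tag` and `describe` are hypothetical names made up for the illustration, not the engine's API.

```python
class Entity:
    """Hypothetical stand-in for a game entity; not the s&box class."""

    def __init__(self, *tags):
        # Any number of string tags, stored as a set for fast lookup.
        self.tags = set(tags)

    def has_tag(self, tag):
        return tag in self.tags


def describe(entity):
    # Branch on tags rather than on the entity's class.
    if entity.has_tag("player"):
        return "treat as player"
    if entity.has_tag("npc"):
        return "treat as npc"
    if entity.has_tag("grenade"):
        return "treat as grenade"
    return "unknown"


pawn = Entity("player")   # a bare entity, no Player base class needed
barrel = Entity()         # no tags at all
```

Here `describe(pawn)` takes the player branch even though `pawn` is just a plain `Entity`, which is the point: the caller never needs to know what class it is.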

Permissions

The nice thing about this tag system is that it can be used for any purpose. I could imagine that we support things like "is-admin" and "can-noclip" as a simple permission system.

Collision Groups

We haven't really gotten anywhere with making collision groups useful and not suck yet, so this is a foot in the door to that problem. Maybe we could collide based on tags?

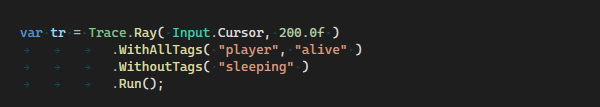

An example of this is above. You can filter your traces by tag, which to me seems a lot simpler to understand than fighting with collision groups.
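As a rough model of what tag-filtered tracing means, the sketch below picks the nearest hit that passes a tag filter. It is Python, not the engine's trace API: the `Entity` dataclass, the pre-computed `distance` field and the `with_any` / `without` parameter names are all assumptions made for the illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    name: str
    distance: float                 # distance along the ray, pre-computed for the sketch
    tags: set = field(default_factory=set)


def trace(entities, with_any=(), without=()):
    """Return the nearest entity that passes the tag filters, or None."""
    hits = [
        e for e in entities
        # If with_any is given, the entity must carry at least one of those tags.
        if (not with_any or e.tags & set(with_any))
        # The entity must carry none of the excluded tags.
        and not (e.tags & set(without))
    ]
    return min(hits, key=lambda e: e.distance, default=None)


world = [
    Entity("glass", 1.0, {"glass"}),
    Entity("guard", 2.0, {"npc"}),
    Entity("wall", 3.0, {"solid"}),
]
```

With this setup, `trace(world, without=["glass"])` skips the glass pane and returns the guard, without anyone having to reason about which collision group the glass belongs to.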

Future

I think of things like setting up triggers in Hammer, where there are options for whether it should trigger on npcs, players and so on. We can change that now so you just enter the tags to require and the tags to ignore. That'll simplify it while also making it more configurable.
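A trigger with require and ignore tags could behave something like the sketch below. This is Python with a hypothetical `Trigger` class, not anything from Hammer or s&box, and it assumes that every required tag must be present and that a single ignored tag is enough to block the trigger. The real rules may differ.

```python
class Trigger:
    """Hypothetical trigger that decides whether to fire based on tags."""

    def __init__(self, require=(), ignore=()):
        self.require = set(require)
        self.ignore = set(ignore)

    def should_fire(self, entity_tags):
        tags = set(entity_tags)
        # Assumption: any ignored tag blocks the trigger outright.
        if tags & self.ignore:
            return False
        # Assumption: all required tags must be present.
        return self.require <= tags


door = Trigger(require=["player"], ignore=["spectator"])
```

In this model `door` fires for an entity tagged "player", but not for an npc or for a player who is also tagged "spectator".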

This is a feature I'm hoping to find more uses for in the future.

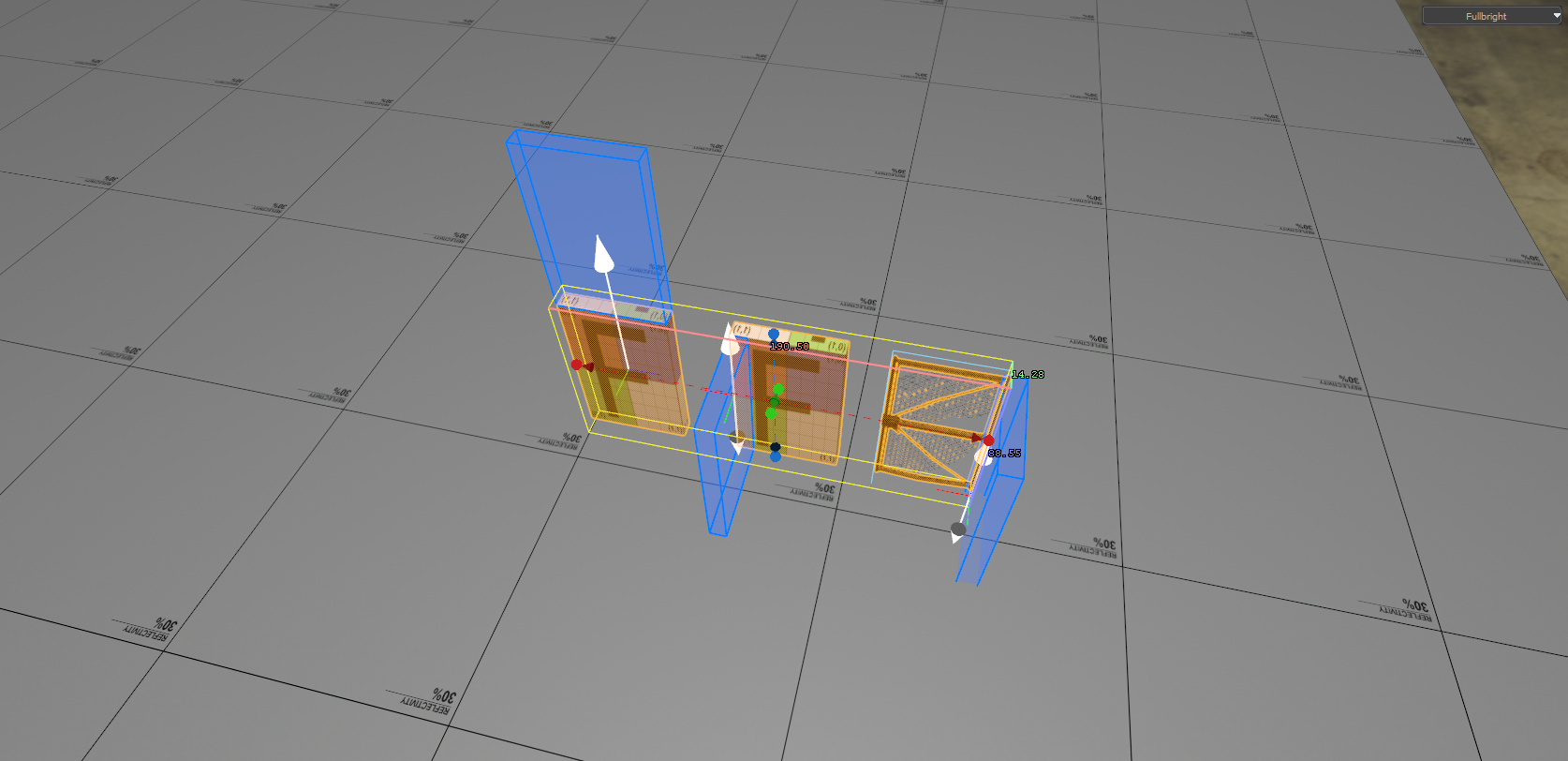

Our intention is to eventually allow coders to add their own Helpers and Tools to Hammer - but that's a while away.
